Baxter Shell Game

Written on December 15th, 2016 by Elton Cheng

Overview

This group project was completed for ME495: Embedded Systems in Robotics.

Group Members: Elton Cheng, Adam Pollack, Blake Strebel, Vismaya Walawalkar

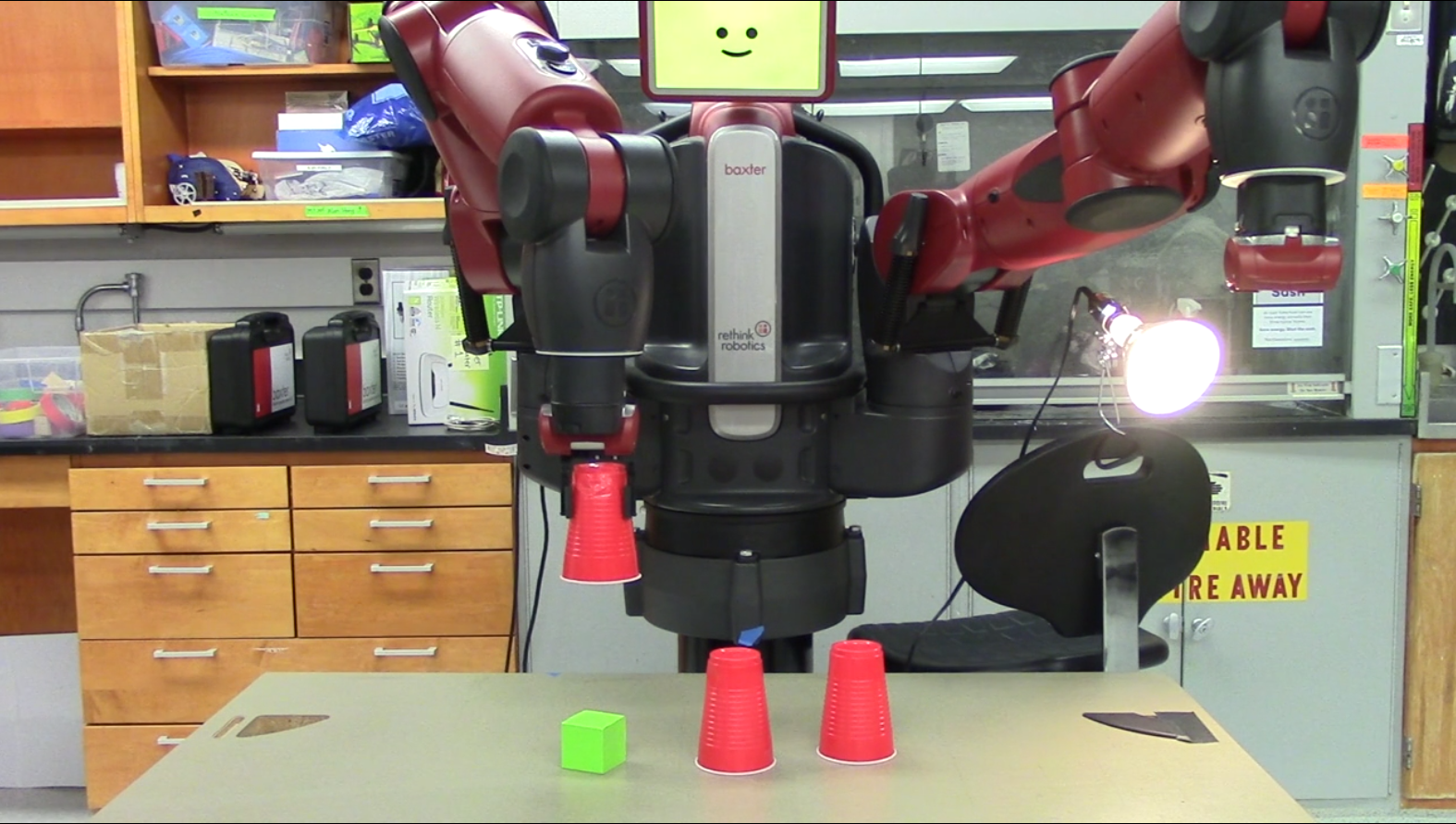

The purpose of this project was to program Rethink’s robot, Baxter, to “pick and place objects”. Our group chose to have Baxter play the Shell Game.

The two main components of this project were the Computer Vision (CV) portion, which tracks the cup holding the item, and the Motion Planning portion, which moves Baxter’s arm to that cup and picks it up.

Computer Vision

For this portion of the project, we used OpenCV color detection to identify and track each of the 3 identical red cups, shown in the picture above, individually. The item, a green cube, is identified and tracked with the same process.
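As a rough illustration of what color detection involves, here is a minimal standard-library sketch, not our actual OpenCV code. The threshold values, the function names `classify_pixel` and `color_centroid`, and the use of a plain centroid are all assumptions made for the example. Note that red hue wraps around 0, so it needs two ranges. With three red cups, a real pipeline would also have to split the red pixels into separate blobs, which is not shown here.

```python
import colorsys

def classify_pixel(r, g, b):
    """Label an 8-bit RGB pixel as 'red', 'green', or None (thresholds are assumed)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    if s < 0.5 or v < 0.3:
        return None  # too washed out or too dark to trust the hue
    if h < 0.05 or h > 0.95:
        return "red"  # red sits at both ends of the hue circle
    if 0.25 < h < 0.45:
        return "green"
    return None

def color_centroid(image, color):
    """Return the mean (x, y) of all pixels with the given label, or None if there are none.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    """
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            if classify_pixel(r, g, b) == color:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))
```

A `None` result from `color_centroid(image, "green")` corresponds to the cube no longer being visible to the camera.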

The center of each circle marking a cup (CoC) and the center of the cube are measured and tracked. When the cube “disappears” from the camera view (when it is hidden under a cup), we assume that the CoC nearest to the cube’s last known center belongs to the cup hiding the item. If Baxter is able to keep track of this cup for the duration of the shuffling process, it publishes the center position of the cup that contains the item.
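The nearest-center rule can be sketched in a few lines of Python. This is an illustration under assumptions rather than our project code: the function names are hypothetical, and the frame-to-frame matching shown here is a simple greedy nearest-neighbor scheme that assumes the cups move only a little between frames.

```python
import math

def nearest_index(point, centers):
    """Index of the center closest to `point` (Euclidean distance in pixels)."""
    return min(
        range(len(centers)),
        key=lambda i: math.hypot(point[0] - centers[i][0], point[1] - centers[i][1]),
    )

def update_tracks(prev_centers, detections):
    """Match each tracked cup to its nearest unclaimed detection in the new frame.

    The returned list keeps the order of `prev_centers`, so an index keeps
    referring to the same physical cup. A cup with no detection left to claim
    keeps its previous position.
    """
    remaining = list(detections)
    updated = []
    for center in prev_centers:
        if remaining:
            updated.append(remaining.pop(nearest_index(center, remaining)))
        else:
            updated.append(center)
    return updated
```

For example, with cup centers `[(100, 200), (300, 200), (500, 200)]` and a cube last seen at `(310, 190)`, `nearest_index` picks index 1. Calling `update_tracks` on every frame then keeps index 1 attached to that cup while it is shuffled.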

Motion Planning

For this portion of the project, we used Baxter’s built-in Inverse Kinematics Solver (IK Solver) to find a pose for Baxter’s arm that reaches a given position, and MoveIt! to create a motion plan from one pose to another.

Once the shuffling process is complete and we know the position of the cup holding the item, we must convert the position of the CoC from 2D pixel coordinates to 3D real world coordinates. This was done using the image_geometry ROS package.

Once we have the real world coordinates, we use Baxter’s IK Solver to solve for a pose of the arm at that coordinate. MoveIt! is then used to move the arm from its current pose to the desired cup pose. This process is repeated for the drop, pick, and grab portions of the motion.
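The math behind this conversion is the pinhole camera model. The sketch below shows it in plain Python as an illustration. The intrinsics (`fx`, `fy`, `cx`, `cy`) and the depth value are made-up numbers, and the function names are hypothetical. In ROS, the ray step corresponds to `PinholeCameraModel.projectPixelTo3dRay` in image_geometry. The depth along the ray has to come from somewhere else, for example a known distance from the camera to the table.

```python
def pixel_to_ray(u, v, fx, fy, cx, cy):
    """Back-project pixel (u, v) to a ray in the camera frame, scaled so that z = 1."""
    return ((u - cx) / fx, (v - cy) / fy, 1.0)

def pixel_to_point(u, v, depth, fx, fy, cx, cy):
    """Scale the ray so the point lies at the given depth (meters) along the camera z axis."""
    x, y, z = pixel_to_ray(u, v, fx, fy, cx, cy)
    return (x * depth, y * depth, z * depth)

# Assumed intrinsics for illustration only, not Baxter's calibrated values.
FX, FY, CX, CY = 400.0, 400.0, 320.0, 200.0

# A pixel 100 px to the right of the principal point, with the table 0.5 m from the camera.
point = pixel_to_point(420, 200, 0.5, FX, FY, CX, CY)
```

The resulting point is expressed in the camera frame. It still has to be transformed into the robot's base frame before it can be handed to the IK Solver, which is not shown here.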

Conclusion

Overall, the project was a success, and our demo video can be found at the image link below. There are several things I would have liked to implement better: how the CV recognized the top of the cup, the robustness of the CV, and the use of the IK Solver and the motion of Baxter.

The initial idea for cup recognition was to look only at the top of the cup. However, because we used completely red cups and did not cover up the sides, the circle marking a cup did not enclose just the top unless the cup was at the center of the camera view. Because of this, the pixel to 3D coordinate transformation was always off, and some hardcoded offsets had to be added to account for it.

Another issue with the CV portion was how light sensitive our project was. If the lighting was too bright, the green cube could not be seen. If it was too dark, the red cups were not as visible. Despite this, as long as the robot stayed in the same environment it was tested in, it would accomplish its task.

Finally, the built-in IK Solver for Baxter was not as reliable as we hoped it would be and gave us many problems when trying to move the arm to certain poses. We resolved this by measuring and hard coding boundaries and restrictions within which the IK Solver was known to find a solution. I believe that this portion of the project could be improved by moving to a desired pose using velocity control rather than position control, as there would then be no need for an IK Solver.
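One simple way to apply hard-coded boundaries like these is to clamp every target position into a box before asking the solver for a pose. The sketch below is only an illustration of that idea. The bounds are placeholder numbers, not the values we measured, and `clamp_to_workspace` is a hypothetical helper.

```python
# Placeholder workspace limits in meters (assumed values for illustration).
WORKSPACE = {
    "x": (0.4, 0.9),
    "y": (-0.5, 0.5),
    "z": (-0.2, 0.3),
}

def clamp_to_workspace(position, bounds=WORKSPACE):
    """Clamp an (x, y, z) target into the box where IK solutions are expected."""
    clamped = []
    for value, axis in zip(position, ("x", "y", "z")):
        low, high = bounds[axis]
        clamped.append(min(max(value, low), high))
    return tuple(clamped)
```

Clamping silently changes the target, so rejecting out-of-range targets instead may be the safer choice when the exact position matters, as it does when grabbing a cup.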